I first wrote about how to get the most bang for your Heroku buck a year ago. Since then a few things have changed and we’ve learnt even more about how to deliver great performance from our Heroku hosted sites. Some of the advice remains the same, but there are some important changes. There is also an important caveat at the end. While this is written primarily for Rails developers using Heroku, much of it is applicable to any site hosted on any platform.

We love Heroku. It makes deployment easy and quick. However, it gets pricey when you add additional dynos at $35 per month each. With a bit of work you can get a lot more out of your Heroku dynos whilst drastically improving the performance of your site for your users and providing better scalability. You might need to spend a bit on other services, but a lot less than if you simply moved the dyno slider.

There are two sides to site performance: how many requests your site can handle, and how long it takes to display in the browser. These are intimately connected but ultimately your users only care about the latter, while your boss or client probably cares more about the former. Shaving 50ms from your response time will increase your throughput, but it won’t help your users if they have 2MB of JavaScript to download.

0. Before you Dive in: Measure Your Performance [New]

Remember the golden rule:

Premature optimization is the root of all evil

– Donald Knuth

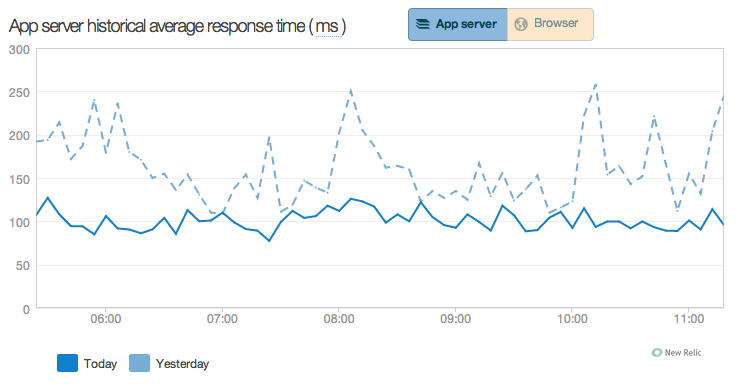

You don’t have a performance problem until you can show me a graph and some numbers. Luckily for you, that’s easily done on Heroku. The performance monitoring service New Relic is available as a free add-on for all Heroku users. Add it to your app and start digging. Not only will it help you work out the problem areas, it will also give you confirmation that your efforts are actually paying off (or not). Other useful tools are available in your browser. In Chrome’s Developer Tools (the other browsers have equivalents), the Network view shows you exactly what happens when you load your page, and the Audits view suggests ways to speed up your site. The audits are especially useful for spotting caching problems.

1. Use Phusion Passenger [New]

Use Phusion Passenger for Heroku. Really, it’s awesome. Phusion Passenger is a multi-threaded application server that now runs on Heroku using Nginx. On average we manage three or four concurrent threads per dyno, depending on memory use. Passenger has several advantages over the other application servers available for Rails on Heroku.

- It’s consistently fast. I’m not convinced that it’s significantly faster than Unicorn, but it does seem to be more consistent. This may be related to its second advantage…

- It’s more memory efficient than the alternatives. While it won’t drastically reduce the memory footprint of your app, it does seem to have shrunk at least one of our apps’ total footprint by 10-15%. That’s not masses, but on Heroku, with its 512MB limit, that can make all the difference. If you breach the 512MB limit Heroku will start swapping memory out to disk, at which point performance will get much less consistent as parts of your application are moved in and out of RAM.

- Assets are served directly by Nginx, not Rails. While we still don’t want to serve lots of assets from our Heroku instance, doing so through Nginx is significantly better than doing so through the application stack.

- Finally, and significantly for your users, Passenger/Nginx support HTTP compression out of the box, for both assets and application responses. You don’t have to do anything. If the browser sends the correct Accept-Encoding header the server will respond appropriately. This can radically reduce the size of the HTML, CSS, and JavaScript sent.
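To get a feel for how much compression can save, here is a minimal sketch using Ruby’s standard Zlib library. The markup is made-up sample data, and real pages will compress less dramatically than this very repetitive example, so treat the numbers as illustrative only.

```ruby
require "zlib"

# Ratio of compressed size to original size (smaller is better).
def compression_ratio(original, compressed)
  compressed.bytesize.to_f / original.bytesize
end

# A repetitive chunk of markup standing in for a real page (made-up sample data).
row  = %(  <li class="product"><a href="/products/1">Product</a></li>\n)
html = "<ul>\n" + (row * 500) + "</ul>\n"

# Gzip is one of the encodings a browser typically advertises in Accept-Encoding.
gzipped = Zlib.gzip(html)

puts "original: #{html.bytesize} bytes"
puts "gzipped:  #{gzipped.bytesize} bytes"
puts format("ratio:    %.1f%%", compression_ratio(html, gzipped) * 100)
```

Running something like this against a saved copy of one of your own pages gives a rough idea of what your users stand to gain.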

2. Keep Within the Memory Limits: Put Your App on a Diet and Don’t Get Greedy with Threads [New]

One of the main limitations of a Heroku dyno is the 512MB RAM limit (1GB if you pay for a 2x dyno). Once you hit that, things start getting swapped out to disk, significantly affecting performance. Requests get slower on average, and response times get more unpredictable.

New Relic can give you an insight into your memory use, on a per instance (in our case Passenger threads) and total basis. You might even be able to squeeze in an extra thread to handle more requests.

Always keep your total memory footprint below the 512MB limit if you want consistently good performance.
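The arithmetic is simple enough to sketch. The helper below is hypothetical, and the 130MB per-instance figure is an invented example; plug in the per-instance memory that New Relic reports for your own app.

```ruby
DYNO_LIMIT_MB = 512

# How many app instances fit under the dyno's memory limit?
# overhead_mb is an allowance for anything else running on the dyno.
def max_instances(per_instance_mb, overhead_mb: 0, limit_mb: DYNO_LIMIT_MB)
  raise ArgumentError, "per_instance_mb must be positive" unless per_instance_mb.positive?

  available = limit_mb - overhead_mb
  return 0 if available <= 0

  (available / per_instance_mb).floor # always round down, never up
end

puts max_instances(130)                  # => 3
puts max_instances(130, overhead_mb: 30) # => 3
puts max_instances(130, limit_mb: 1024)  # => 7
```

Rounding down is the whole point: a fourth 130MB instance would take the total to 520MB, just over the limit and into swap.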

There are three main approaches to reducing the size of your application:

First and most obvious, remove unused code from your app and Gems from your Gemfile. If you don’t need it, it shouldn’t be there.

Secondly, be fastidious about your Gemfile groups. Make sure that gems that are only used in test, development, or asset compilation are in the relevant groups. Don’t just dump everything in the default group, or all of it will be automatically required at startup, consuming memory. The Rails 4 default project has done away with the :assets Gemfile group, but you can easily add it back in by editing application.rb and changing

Bundler.require(:default, Rails.env)

to

Bundler.require(*Rails.groups(:assets => %w(development test)))

Finally, if there are any gems that are used solely for background workers or rake tasks, you should manually require them where you need them rather than auto-requiring them at startup.
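A minimal sketch of that last approach follows. The job class and the gem name in the comment are hypothetical, and the standard library’s digest stands in for whatever heavy gem only your workers need.

```ruby
# In the Gemfile (assumed), mark the gem so that Bundler.require skips it at boot:
#
#   gem "some_heavy_gem", require: false
#
# Then load it only where it is actually used.
class ChecksumJob
  def perform(payload)
    # Loaded on first use, so only processes that run this job pay the memory
    # cost. "digest" is a stdlib stand-in for a heavy, worker-only gem.
    require "digest"

    Digest::SHA256.hexdigest(payload)
  end
end

puts ChecksumJob.new.perform("hello")
```

Calling require repeatedly is cheap, because Ruby only loads a library the first time it is asked for.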

Don’t be tempted to use too many Passenger threads if it means going over the memory limit. The increase in concurrency will probably be outweighed by an overall reduction in performance of all the threads.

The graph below shows what happened when we reduced the number of threads on an application so that its memory consumption dropped from about 530MB to about 390MB. Throughput on the site was roughly comparable. Notice how much more consistent the performance is afterwards.

3. Serve Static Assets and Uploads from a CDN on Multiple Subdomains – but Don’t Use asset_sync [Updated]

Last year I recommended using asset_sync to move your assets to S3, removing the need for your Heroku dyno to serve them. With the arrival of Passenger on Heroku this is no longer good advice. Because Passenger serves assets through Nginx and will serve the compressed versions where appropriate, serving your assets from your dyno through a CDN (content delivery network) such as Amazon CloudFront will give your users a much better experience than asset_sync, while not increasing the load on your dyno. Because the cache expiry of your assets is set, by default, to a very long time, the number of requests that actually hit your dyno will be tiny (around once per asset per year).

To really juice up the load times of your site, configure four subdomains for your assets, numbered from 0 to 3, e.g. assets0.myapp.com to assets3.myapp.com, pointing at your asset CDN and set the following in your production configuration:

config.action_controller.asset_host = "assets%d.myapp.com"

Rails will spread asset links across these subdomains. Browsers limit the number of concurrent requests they make to a single host name (historically two, more like six in recent browsers), so serving assets from four host names lets the browser fetch up to four times as many assets in parallel. Page load speed is then limited mainly by the speed of your user’s connection. If your user has a good connection then they will be able to download most of your assets in parallel.
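Here is a minimal sketch of how such a pattern can be expanded. It assumes the host number is derived from a checksum of the asset path, which is how I understand Rails to do it; the helper name is hypothetical and this is not the framework’s actual code.

```ruby
require "zlib"

ASSET_HOST_PATTERN = "assets%d.myapp.com"

# Pick one of four hosts (0 to 3) from a checksum of the asset path.
# The same path always maps to the same host, so browser and CDN
# caches stay effective across page loads.
def asset_host_for(source, pattern = ASSET_HOST_PATTERN)
  pattern % (Zlib.crc32(source) % 4)
end

%w[application.css application.js logo.png hero.jpg].each do |asset|
  puts "#{asset} -> #{asset_host_for(asset)}"
end
```

Hashing rather than strictly rotating matters: if the same file moved between host names from one page to the next, the browser would have to download it again.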

Heroku have documentation walking you through the CloudFront setup.

4. Turbo-Charge your Application with Memcache backed View Caching and In-app Caches [Updated]

If you’ve not encountered caching in Rails, stop reading this article right now, go read the Rails Guide to Caching and then DHH’s short guide to key based cache expiry. Caching in Rails 4 is even better, with improved support for “Russian Doll” caching.

View caching in Rails can have a profound effect on your application’s response time. In the past we have found that rendering pages, especially complex ones with lots of partials, can easily account for two-thirds of the total processing time, much more than you might expect. Use New Relic to guide your improvements.

A Memcache store is shared between your dynos so they all benefit from any cached item. The Memcachier addon gives you 25MB for free, and is pretty reasonably priced from there on up. Just adding a small cache store of 25MB can make a significant difference to the load time of your pages.
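If key based expiry is new to you, this plain Ruby sketch shows the idea without needing Rails or Memcache. TinyCache and Product are hypothetical stand-ins for the cache store and an ActiveRecord model, and the render lambda stands in for an expensive view partial.

```ruby
# Tiny in-memory stand-in for a Memcache-backed store (hypothetical class).
class TinyCache
  def initialize
    @data = {}
  end

  # Return the cached value for key, computing and storing it on a miss.
  def fetch(key)
    return @data[key] if @data.key?(key)

    @data[key] = yield
  end
end

Product = Struct.new(:id, :name, :updated_at) do
  # The key changes whenever the record changes, so stale entries are
  # simply never read again and no explicit expiry call is needed.
  def cache_key
    "products/#{id}-#{updated_at.to_i}"
  end
end

cache   = TinyCache.new
renders = 0
render  = lambda do |product|
  renders += 1 # count how often the "expensive" render actually runs
  "<li>#{product.name}</li>"
end

product = Product.new(1, "Teapot", Time.at(1_000))
cache.fetch(product.cache_key) { render.call(product) } # miss: renders
cache.fetch(product.cache_key) { render.call(product) } # hit: no render

product.name = "Blue teapot"
product.updated_at = Time.at(2_000)
html = cache.fetch(product.cache_key) { render.call(product) } # new key: renders

puts html    # <li>Blue teapot</li>
puts renders # 2
```

The old entry is never deleted here. With Memcache that is fine, because the least recently used items are evicted once the store fills up.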

Don’t be afraid to de-normalise some of your data, where appropriate. Sometimes storing a precomputed value in a model, especially one based on complex transitive relationships with other models, makes up in performance improvement what it loses in programming purity and elegance. The most common example of this approach is ActiveRecord counter caches, but you can easily add your own.

5. Offload Complex Search to a Dedicated Provider [Unchanged]

If you have an application that needs to perform complex searches over large datasets, don’t do it in your application directly. If searches regularly take a long time, consider using something like Solr (available as a Heroku add-on), Amazon CloudSearch, or one of the many Search as a Service providers. You’ll not only get faster search performance, but you’ll also save the vast amounts of development time you would otherwise spend trying to optimise your in-app search. If search is a significant aspect of your site, the cost of a good search service will probably be better value than just scaling your database.

6. Use Background Processing the Smart Way with Delayed::Job and HireFire [Unchanged]

Background processing with Delayed::Job is a great way of speeding up your web requests. Potentially slow tasks like image processing or sending signup emails can happen outside of the request-response cycle, making it much snappier and freeing up your dyno to handle more requests. The downside is that you need to run a worker dyno at $35/month.

Michael van Rooijen’s HireFire modifies Delayed::Job and Resque to automatically scale the number of worker dynos based on the jobs in the queue. Because Heroku charge by the dyno/second, spinning up 10 workers for one minute costs the same as one worker for ten minutes, so with HireFire you can potentially get things done quicker while paying less than you would if you ran a dedicated worker dyno.
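The sums behind that claim can be sketched in a few lines. This assumes the $35 monthly price is prorated evenly per second over a 30-day month, which is a simplification for illustration and not Heroku’s actual billing formula.

```ruby
SECONDS_PER_MONTH  = 30 * 24 * 60 * 60 # assumes a 30-day month
MONTHLY_DYNO_PRICE = 35                # dollars, the figure quoted above

# Cost in dollars of running `workers` dynos for `seconds` each,
# assuming the monthly price is prorated evenly per second.
def worker_cost(workers, seconds)
  Rational(MONTHLY_DYNO_PRICE * workers * seconds, SECONDS_PER_MONTH)
end

burst     = worker_cost(10, 60)                # ten workers for one minute
steady    = worker_cost(1, 10 * 60)            # one worker for ten minutes
always_on = worker_cost(1, SECONDS_PER_MONTH)  # a dedicated worker all month

puts burst == steady                   # true
puts format("$%.4f", burst.to_f)       # $0.0081
puts format("$%.2f", always_on.to_f)   # $35.00
```

The same amount of work costs the same either way; the burst just finishes ten times sooner, and neither comes close to the cost of a worker left running all month.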

HireFire does have one limitation: it only works for jobs scheduled for immediate execution. If that is an issue, Michael has a HireFire service that will monitor your application for you, so jobs scheduled in the future will be run.

7. Don’t Upload and Process Files with your Web Dynos [Unchanged]

If you use something like CarrierWave or Paperclip, by default the uploading and processing of images is done by your dyno. While this is happening your dyno thread is completely tied up, unable to handle requests from any other user.

Decouple the upload process from your dyno using something like CarrierWave Direct. With a bit of client-side magic it uploads files to S3 directly, rather than through the dyno. The images then get resized by background processes using Delayed::Job or Resque. This obviously has the downside that you’ll need a worker running.

Another option, which we’ve used recently, is the awesome Cloudinary service. They provide direct image uploading, on-demand image processing (including face detection, which even seems to work on cats) and a worldwide CDN all in one package. There is a free tier to get you started, and for $39 (slightly more than one Heroku dyno) their Basic plan will be more than enough for many sites.

Putting it all Together

At the end of all this we’ve freed up our Heroku dyno from doing things it’s not very good at like serving static files and uploads, and juiced up its performance when doing what it’s great at, serving Rails application requests with no sys-admin in sight.

Each technique can be easily applied to your existing applications, but if you develop with them in mind from the start you get all the benefits with almost no additional work. On their own each one will help the performance of your application, but combining them together will significantly extend the amount of time before you have to start forking out for lots more dynos, and when you do you’ll get much more bang for each of your thirty-five Heroku bucks.

If you’ve got any other tips for getting the most out of a Rails application, whether or not it’s on Heroku, we’d love to hear about them!

Postscript: Caveat Developer

Heroku is fantastic for reducing developer overhead and with a bit of work you can serve large and popular sites on it for relatively little. We use it for many of the sites we build. However we also use other hosting platforms, especially Amazon AWS, so we can compare our experiences of the two and we’ve noticed a couple of issues.

We frequently see significant performance drops after deploying a new version. Response times sometimes treble, with all parts of the stack slowing by the same factor. Scaling the application down and then back up will often fix the problem. This is not a code issue: it can happen after deploying a change to some CSS.

No matter how minimal an app is, the best response time I’ve ever seen in the browser is about 150ms, and even that isn’t consistent; it’s frequently longer. Now, 150ms is pretty quick (about the blink of an eye, in fact), but applications we’ve hosted on single Small EC2 instances have shown consistently better performance without any optimisation. Both of these issues are probably due to a combination of Heroku’s routing infrastructure and the way your dyno shares resources with others on the same host hardware.

The differences are only in the order of 100ms or so, less than the blink of an eye, so how much it matters will depend on your use case. Constant monitoring of your application is key.

Obviously, while you get by on a single free Heroku dyno you can’t complain too much, but once you start forking out for extra dynos you might want to look at Amazon Elastic Beanstalk as an alternative. It’s still quite immature compared to Heroku (but improving all the time), and you’ll have to get your hands a bit dirty setting it up, but it gives you most of the ease of maintenance of Heroku. If you are prepared to pay up front, the cost of a single Small EC2 instance is on a par (or less) with a Heroku dyno, but gives you more memory and more consistent performance. You also get the advantages of AWS’s other services like automatic Elastic Scaling for those busy periods.

As with all such decisions, how and where you host is going to depend on what you need and how you want to spend your cash. With a bit of work Heroku can form the core of a really good setup that will scale effortlessly, but it’s always worth keeping an eye on the other options.